
Hierarchical semantic segmentation of image scene with object labeling

EURASIP Journal on Image and Video Processing volume 2018, Article number: 15 (2018)

Abstract

Semantic segmentation of an image scene provides semantic information about image regions but little information about objects. In this paper, we propose a method of hierarchical semantic segmentation, comprising a scene level and an object level, which aims at labeling both scene regions and objects in an image. At the scene level, we use a feature-based MRF model to recognize the scene categories. The raw probability of each category is predicted in a one-vs-all classification mode, and the features and raw probabilities of superpixels are embedded into the MRF model. Graph-cut inference then yields the raw scene-level labeling. At the object level, we use a constraint-based geodesic propagation to obtain object segmentation, with the category and appearance features serving as prior constraints that guide the direction of object label propagation. In this hierarchical model, the scene-level and object-level labelings are mutually related, so that regions and objects are optimized interactively. Experimental results on two datasets demonstrate the good performance of our method.

1 Introduction

Semantic segmentation is a fundamental task in computer vision that underpins many applications, such as image editing, image-based modeling, and autonomous driving [1–3]. Typical approaches to semantic segmentation include parametric ones [4–8] and nonparametric ones [9–13], both of which achieve promising performance. Previous works focus on correctly assigning a unique category label to each pixel, generating region segments with semantic information. However, these segments carry little information about the objects in the scene. Specifically, all the objects of the same category are treated as a single whole, which makes it difficult to distinguish different instances. For clarity, we adopt the definition of objects used in [14, 15], which distinguishes objects from materials: objects are better characterized by their overall shape than by local appearance, whereas material categories have no consistent shape but fairly consistent texture.

Besides the typical semantic segmentation approaches, many approaches aim to improve the accuracy of object segments, such as interactive segmentation [16–18] and co-segmentation [19–21]. In these works, the prior of the latent object is provided by either user scribbles or object coherency to generate exact segments, but these segments usually carry no semantic information about the objects.

In fact, object details are useful for a precise understanding of the image scene. For example, in Fig. 1, the scene-level semantic labeling tells us that the image shows persons and horses in a natural scene. The object-level semantic labeling gives us more details about the scene, such as the number of persons and horses and the layout of each person and horse. With these details, we can even infer that the scene may be a snapshot of a polo game. Object details are therefore valuable for precise scene parsing and should be predicted accurately. Given the diversity of objects in texture, shape, and pose, object labeling remains a challenging problem, although several approaches have addressed it [1, 15, 22, 23].

In this paper, we propose a hierarchical semantic segmentation method that aims at understanding both scene regions and objects. Under the assumption that scene-level and object-level labeling are mutually beneficial, we first obtain a scene-level labeling via a feature-based MRF, and then use the category prior together with appearance features to improve object labeling. We define object labeling analogously to semantic labeling, i.e., as assigning a unique object label to each pixel. A constraint-based geodesic propagation algorithm is then proposed to obtain object segments.

The main contributions of this paper are as follows: (1) a multi-level semantic labeling framework is proposed for understanding both regions and objects; (2) a constraint-based geodesic propagation algorithm is introduced for object labeling. The rest of this paper is organized as follows. A brief overview is given in Section 2.1. Sections 2.2 and 2.3 then describe scene-level and object-level labeling, respectively. We present our experimental results in Section 3 and give a brief conclusion in Section 4.

2 Methods

2.1 Hierarchical model overview

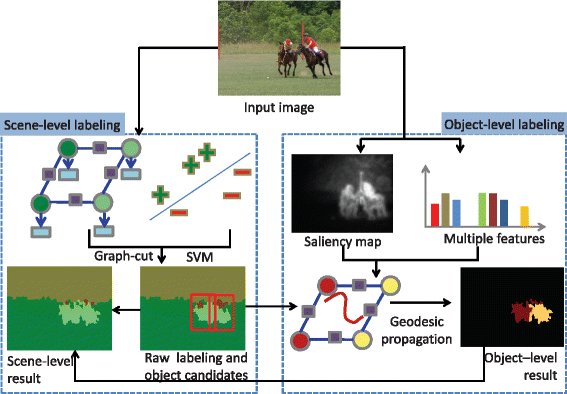

The framework of our hierarchical model includes scene-level labeling and object-level labeling. The overview is illustrated in Fig. 2.

In the scene-level labeling, we use a feature-based MRF model to recognize the categories in a scene. To make the labeling more efficient, we over-segment the image into a set of superpixels using the TurboPixels algorithm [24]. On the over-segmented image, pixel-wise features, including filter responses, boundary features, pyramids of HOG, and RGB colors, are mapped into a feature vector of the corresponding superpixel. We use a one-vs-all classification mode to obtain the raw probability of each category. The raw probabilities and features are embedded into the MRF model as the unary and pairwise potentials, respectively. Graph-cut inference then yields the raw scene-level labeling. In addition, we perform object detection with SVM-trained detectors to predict object candidates. The number of instances is estimated from the raw probabilities and the object candidates. The final scene-level labeling is refined using the object-level labeling, yielding a more precise scene-level result.

In the object-level labeling, we first perform saliency detection to obtain a saliency map. The region of interest (ROI) for objects is obtained from the saliency map and the raw probability map. A graph model is formulated over this ROI, in which each node denotes one superpixel and each edge denotes the adjacency of two superpixels. The edge weights are computed from multi-dimensional features of each superpixel, including the HOG descriptor, texture descriptor, Lab colors, and gradient features. These features are different from those used for scene-level labeling. The node weights consist of the saliency confidence and the raw probability, which are mapped into geodesic distances. We then perform geodesic propagation on the graph model. In each propagation step, the node with the smallest geodesic distance is selected as the seed node. We fix the label of this seed and update the status of its neighbors for the next step. When all nodes have been fixed, the object labeling result is obtained.

2.2 Scene labeling

2.2.1 Features for recognition

We utilize a one-vs-all mode to train the classifier for each category with dense samples from the training images. The feature vector fv(i) of superpixel i consists of filter response features [4], boundary features [25], pyramids of HOG [26], and RGB color features. The filter response, boundary and color features are sampled for each pixel, and mapped to superpixel level by averaging pixels over each superpixel. The pyramids of HOG are computed over a patch region of each superpixel. In testing, the classifiers learned by the Joint Boosting algorithm [4] generate the initial raw probabilities of each category l for i, which is denoted as P(i,l).
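The step that maps pixel-wise features to the superpixel level by averaging can be sketched as below. This is a minimal plain-Python illustration, not the authors' MATLAB implementation; the function name and the flat "one entry per pixel" input layout are assumptions, and the HOG pyramid computed over a patch around each superpixel is not covered.

```python
from typing import Dict, List, Sequence


def superpixel_mean_features(
    pixel_features: Sequence[Sequence[float]],
    superpixel_ids: Sequence[int],
) -> Dict[int, List[float]]:
    """Average per-pixel feature vectors over each superpixel.

    pixel_features[k] is the feature vector of pixel k, and superpixel_ids[k]
    is the index of the superpixel containing that pixel (hypothetical layout).
    """
    sums: Dict[int, List[float]] = {}
    counts: Dict[int, int] = {}
    for feat, sp in zip(pixel_features, superpixel_ids):
        acc = sums.setdefault(sp, [0.0] * len(feat))
        for d, value in enumerate(feat):
            acc[d] += value
        counts[sp] = counts.get(sp, 0) + 1
    # Divide each accumulated sum by the number of pixels in its superpixel.
    return {sp: [v / counts[sp] for v in acc] for sp, acc in sums.items()}


if __name__ == "__main__":
    feats = [[1.0, 2.0], [3.0, 4.0], [10.0, 0.0]]
    ids = [0, 0, 1]
    print(superpixel_mean_features(feats, ids))  # {0: [2.0, 3.0], 1: [10.0, 0.0]}
```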

2.2.2 MRF model

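The objective of scene-level labeling is to assign each superpixel $i$ a category label $l_i$ from a fixed category label set $\mathcal{L}$. The energy $E(L)$ of our MRF model over the labeling $L = \{l_i\}$ of all superpixels is defined as follows:

$$E(L) = \sum_{i} \psi(l_i) + \sum_{(i,j) \in \mathcal{N}} \phi_{ij}(l_i, l_j), \qquad (1)$$

where $\mathcal{N}$ is the set of pairs of adjacent superpixels.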

The unary term $\psi(l_i)$ measures the cost of assigning label $l_i$ to superpixel $i$, and the pairwise term $\phi_{ij}(l_i, l_j)$ measures the penalty for assigning different labels to similar adjacent superpixels $i$ and $j$. Our unary term is an exponential form of the normalized raw probabilities, i.e., $\psi(l_i) = \exp(-P(i, l_i))$. Our pairwise term is computed as $\phi_{ij}(l_i, l_j) = \exp\left(-z \, \lVert \mathrm{fv}(i) - \mathrm{fv}(j) \rVert^2\right)$, where $z$ is a normalization parameter.
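As a concrete reading of the unary and pairwise terms above, the sketch below evaluates the MRF energy of a candidate labeling. It assumes that the pairwise penalty is charged only when two adjacent superpixels receive different labels (a Potts-style reading of "penalty of different assignment") and that `prob[i][l]` holds the normalized raw probability P(i,l); the function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def unary_cost(prob: Sequence[Sequence[float]], i: int, label: int) -> float:
    """psi(l_i) = exp(-P(i, l_i)): a more probable label is cheaper."""
    return math.exp(-prob[i][label])


def pairwise_cost(fv: Sequence[Sequence[float]], i: int, j: int, z: float) -> float:
    """phi_ij = exp(-z * ||fv(i) - fv(j)||^2): similar neighbours cost more to separate."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(fv[i], fv[j]))
    return math.exp(-z * sq_dist)


def mrf_energy(
    labels: Sequence[int],
    prob: Sequence[Sequence[float]],
    fv: Sequence[Sequence[float]],
    edges: Sequence[Tuple[int, int]],
    z: float,
) -> float:
    """Unary costs of all superpixels plus pairwise costs of adjacent pairs whose labels differ."""
    energy = sum(unary_cost(prob, i, label) for i, label in enumerate(labels))
    energy += sum(pairwise_cost(fv, i, j, z) for i, j in edges if labels[i] != labels[j])
    return energy


if __name__ == "__main__":
    prob = [[0.9, 0.1], [0.2, 0.8]]
    edges = [(0, 1)]
    similar = [[0.0], [0.0]]    # identical features: splitting costs exp(0) = 1
    distinct = [[0.0], [10.0]]  # very different features: splitting is almost free
    print(mrf_energy([0, 0], prob, similar, edges, 1.0) < mrf_energy([0, 1], prob, similar, edges, 1.0))  # True
    print(mrf_energy([0, 1], prob, distinct, edges, 1.0) < mrf_energy([0, 0], prob, distinct, edges, 1.0))  # True
```

The example shows the intended trade-off: with identical features the smoothness penalty outweighs the second superpixel's preference for label 1, while with very different features each superpixel keeps its most probable label.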

We use the graph-cut algorithm [27] to obtain the scene-level labeling result. The regions of object categories are later refined by the object-level labeling.
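The paper minimizes this energy with graph cuts [27]. A full α-expansion implementation does not fit in a short example, so the sketch below swaps in iterated conditional modes (ICM), a simpler greedy optimizer that only reaches a local minimum of the same unary-plus-pairwise energy. Initializing each superpixel with its most probable category, the iteration cap, and all names are assumptions.

```python
import math
from typing import List, Sequence


def icm_labeling(
    prob: Sequence[Sequence[float]],
    fv: Sequence[Sequence[float]],
    neighbors: Sequence[Sequence[int]],
    z: float,
    max_iters: int = 10,
) -> List[int]:
    """Greedy ICM on sum_i exp(-P(i,l_i)) + sum_{l_i != l_j} exp(-z * ||fv(i) - fv(j)||^2)."""
    n, k = len(prob), len(prob[0])

    def affinity(i: int, j: int) -> float:
        return math.exp(-z * sum((a - b) ** 2 for a, b in zip(fv[i], fv[j])))

    # Start from the most probable category of each superpixel.
    labels = [max(range(k), key=prob[i].__getitem__) for i in range(n)]
    for _ in range(max_iters):
        changed = False
        for i in range(n):
            # Local energy of superpixel i for each candidate label, neighbours held fixed.
            costs = [
                math.exp(-prob[i][c]) + sum(affinity(i, j) for j in neighbors[i] if labels[j] != c)
                for c in range(k)
            ]
            best = min(range(k), key=costs.__getitem__)
            if best != labels[i]:
                labels[i] = best
                changed = True
        if not changed:  # no single-site move lowers the energy: local minimum
            break
    return labels


if __name__ == "__main__":
    prob = [[0.9, 0.1], [0.45, 0.55], [0.9, 0.1]]
    chain = [[1], [0, 2], [1]]
    # Middle superpixel resembles its neighbours: smoothing flips it to label 0.
    print(icm_labeling(prob, [[0.0], [0.5], [0.0]], chain, z=4.0))  # [0, 0, 0]
    # Middle superpixel looks very different: it keeps its own most probable label.
    print(icm_labeling(prob, [[0.0], [10.0], [0.0]], chain, z=1.0))  # [0, 1, 0]
```

Graph cuts are preferred in practice because α-expansion offers stronger optimality guarantees than ICM's single-site updates; the sketch only illustrates what is being minimized.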

2.2.3 Recognition for objects

It is challenging to identify the number of objects in a scene accurately. We first perform object detection to obtain a raw estimate of the object candidates. The detectors, learned with an SVM, propose a set of object hypotheses {H}, in which each h ∈ {H} has a bounding box and a score. We then rank these hypotheses by score and prune those whose scores are lower than a threshold $T_h$. The remaining hypotheses in the pruned {H} are the object candidates to be labeled. In our implementation, $T_h$ is learned on the training images with their bounding boxes: we estimate the score distribution with a 95% confidence interval. Ideally, $T_h$ would be the value that yields over 95% true positive predictions, i.e., over 95% of the bounding boxes whose scores are higher than $T_h$ are true positives. However, to account for outlier bounding boxes at test time, we relax this requirement and select $T_h$ as the value that yields over 85% true positive predictions, which is expected to reduce false negative predictions.

In practice, when $T_h$ does not work well for a specific image, for example, when the pruned {H} contains no object candidates or far more candidates than usual, we adjust the number of candidates experimentally.
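One way to read the threshold rule above is as a precision criterion: $T_h$ is the lowest score at which at least 85% of the training boxes scoring at or above it are true positives. The sketch below implements that reading together with the ranking and pruning step. Treating "higher than" as `>=`, the optional `max_count` cap (standing in for the per-image adjustment and the "up to N objects" limit used in the experiments), the box layout, and all names are assumptions, not the authors' code.

```python
from typing import List, Optional, Sequence, Tuple

Box = Tuple[int, int, int, int]  # hypothetical (x0, y0, x1, y1) layout


def select_score_threshold(
    scores: Sequence[float], is_true_positive: Sequence[bool], target: float = 0.85
) -> Optional[float]:
    """Lowest score t such that >= target of the training boxes with score >= t are true positives."""
    best = None
    for t in sorted(set(scores), reverse=True):
        kept = [tp for s, tp in zip(scores, is_true_positive) if s >= t]
        if sum(kept) / len(kept) >= target:
            best = t  # precision is still acceptable at this lower threshold
    return best


def prune_hypotheses(
    hypotheses: Sequence[Tuple[Box, float]], t_h: float, max_count: Optional[int] = None
) -> List[Tuple[Box, float]]:
    """Rank (box, score) hypotheses by score, drop those below t_h, optionally keep at most max_count."""
    ranked = sorted((h for h in hypotheses if h[1] >= t_h), key=lambda h: h[1], reverse=True)
    return ranked if max_count is None else ranked[:max_count]


if __name__ == "__main__":
    scores = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4]
    labels = [True, True, True, True, False, False]
    print(select_score_threshold(scores, labels))  # 0.6
    hyps = [((0, 0, 10, 10), 0.3), ((5, 5, 20, 20), 0.9), ((1, 1, 4, 4), 0.5)]
    print(prune_hypotheses(hyps, t_h=0.35, max_count=7))
    # [((5, 5, 20, 20), 0.9), ((1, 1, 4, 4), 0.5)]
```

The function returns `None` when no threshold reaches the target precision, which is one of the situations the text says is handled by adjusting the candidate count by hand.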

2.3 Object labeling

The objective of object-level labeling is to assign each pixel a unique object label. The scene-level labeling gives a rough region for each object category, and we perform object labeling within such regions instead of over the whole image. We begin by describing how the ROI is identified.

2.3.1 ROI region

Objects in an image scene usually attract more human attention than materials do; therefore, we assume that saliency detection can predict valid object regions. Some approaches have used saliency information for object segmentation [28, 29]. In our implementation, we use the algorithm of Goferman et al. [28] to compute a down-sampled saliency map and then up-sample it to the original image size. An example of a saliency map is shown in Fig. 3.

For a specific category C, the ROI of its instances is supposed to include (1) superpixels whose probabilities of C are higher than those of the other categories, (2) superpixels whose probabilities of C are higher than a threshold $T_p$, and (3) superpixels whose saliency values are higher than a threshold $T_s$. The thresholds $T_p$ and $T_s$ are estimated in a similar way to $T_h$: we estimate the distributions of raw probability and saliency on the training images, and then select the values satisfied by over 85% of the superpixels as $T_p$ and $T_s$, respectively.
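The ROI test and the threshold estimation can be sketched as follows. The text lists three kinds of superpixels the ROI should include; the sketch reads this as their union. It also reads "the values satisfied by over 85% of the superpixels" as the largest value that at least 85% of the training values reach. Both readings, and all names, are assumptions.

```python
import math
from typing import Sequence, Set


def coverage_threshold(values: Sequence[float], keep_fraction: float = 0.85) -> float:
    """Largest value v such that at least keep_fraction of the values are >= v."""
    ordered = sorted(values, reverse=True)
    index = max(math.ceil(keep_fraction * len(ordered)) - 1, 0)
    return ordered[index]


def roi_superpixels(
    prob: Sequence[Sequence[float]],
    saliency: Sequence[float],
    category: int,
    t_p: float,
    t_s: float,
) -> Set[int]:
    """Indices of superpixels that satisfy any of the three ROI conditions for `category`."""
    roi = set()
    for i, p in enumerate(prob):
        most_likely = p[category] >= max(p)  # (1) C is (one of) the most probable categories
        confident = p[category] > t_p        # (2) P(i, C) > T_p
        salient = saliency[i] > t_s          # (3) S(i) > T_s
        if most_likely or confident or salient:
            roi.add(i)
    return roi


if __name__ == "__main__":
    print(coverage_threshold(list(range(1, 11))))  # 2 (9 of the 10 values are >= 2)
    prob = [[0.7, 0.3], [0.2, 0.8], [0.35, 0.65], [0.1, 0.9]]
    saliency = [0.1, 0.2, 0.1, 0.9]
    print(sorted(roi_superpixels(prob, saliency, category=0, t_p=0.3, t_s=0.45)))  # [0, 2, 3]
```

In the example, superpixel 0 enters through condition (1), superpixel 2 through condition (2), and superpixel 3 through condition (3); superpixel 1 meets none of them.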

2.3.2 Feature weights on graph

Once the ROI is identified, a graph model is formulated, in which each node denotes one superpixel in the ROI and each edge denotes the adjacency of two superpixels. The graph weights are derived from appearance features, including the HOG descriptor, texture descriptor, Lab colors, and gradient features. The first three types of features are associated with the nodes, and the gradient features with the edges. These features are different from the fv used for scene labeling; we observe experimentally that, for object labeling, color features in Lab space perform better than those in RGB space. We leverage these features as prior constraints.

Each kind of feature is described in a bag-of-words style, as in [30]. A pixel-level HOG spatial pyramid is constructed with 8 × 8 blocks, a 4-pixel step size, and 2 scales per octave. These HOG features are concatenated into a one-dimensional vector and clustered into 1000 k-means centers, resulting in a HOG descriptor. The pixel-level texture features are extracted with a Gaussian filter bank and quantized to the nearest of 256 k-means centers; the resulting 256-bin histogram is used as the texture descriptor. In Lab color space, the color features are densely sampled and quantized to the nearest of 128 k-means centers. The gradient features, which reflect object boundaries, are used as propagation constraints and include both horizontal and vertical gradients. All these pixel-level features are mapped to the superpixel level. The HOG, texture, and color features are combined linearly into the difference term D(i,j), as shown in Eq. 2.
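The bag-of-words encoding described above (assign each pixel descriptor to its nearest k-means center, then build a histogram per superpixel) can be sketched as below. The k-means centers are taken as given, since learning them is not shown; L1-normalizing each histogram so that superpixels of different sizes are comparable is an assumption, as are the names.

```python
from typing import Dict, List, Sequence


def nearest_center(vec: Sequence[float], centers: Sequence[Sequence[float]]) -> int:
    """Index of the center closest to vec in squared Euclidean distance."""
    return min(
        range(len(centers)),
        key=lambda c: sum((a - b) ** 2 for a, b in zip(vec, centers[c])),
    )


def bow_histograms(
    pixel_descriptors: Sequence[Sequence[float]],
    superpixel_ids: Sequence[int],
    centers: Sequence[Sequence[float]],
) -> Dict[int, List[float]]:
    """Per-superpixel histogram of visual-word assignments, L1-normalised."""
    counts: Dict[int, List[float]] = {}
    for desc, sp in zip(pixel_descriptors, superpixel_ids):
        hist = counts.setdefault(sp, [0.0] * len(centers))
        hist[nearest_center(desc, centers)] += 1.0
    normalised: Dict[int, List[float]] = {}
    for sp, hist in counts.items():
        total = sum(hist)
        normalised[sp] = [v / total for v in hist]
    return normalised


if __name__ == "__main__":
    centers = [[0.0, 0.0], [10.0, 10.0]]
    descs = [[1.0, 1.0], [9.0, 9.0], [0.0, 1.0], [11.0, 10.0]]
    ids = [0, 0, 0, 1]
    print(bow_histograms(descs, ids, centers))
    # superpixel 0 -> about [0.667, 0.333], superpixel 1 -> [0.0, 1.0]
```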

where $i$ and $j$ denote adjacent nodes, and $F_{hog}$, $F_{tex}$, and $F_{color}$ denote the HOG, texture, and color features. In our implementation, we set $\lambda_1 = 0.1$, $\lambda_2 = 0.3$, and $\lambda_3 = 0.6$ experimentally.
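Eq. 2 combines the three feature differences linearly with weights 0.1, 0.3, and 0.6. The text does not specify how two histograms are compared, so the sketch below assumes half the L1 distance between normalized histograms, which lies in [0, 1]; another histogram distance (for example χ²) may match the original better. All names are hypothetical.

```python
from typing import Sequence, Tuple


def hist_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """Assumed histogram distance: half the L1 distance (0 = identical, 1 = disjoint)."""
    return 0.5 * sum(abs(a - b) for a, b in zip(h1, h2))


def feature_difference(
    i: int,
    j: int,
    f_hog: Sequence[Sequence[float]],
    f_tex: Sequence[Sequence[float]],
    f_color: Sequence[Sequence[float]],
    lambdas: Tuple[float, float, float] = (0.1, 0.3, 0.6),
) -> float:
    """D(i, j) as a weighted sum of HOG, texture and Lab-color histogram distances."""
    l_hog, l_tex, l_color = lambdas
    return (
        l_hog * hist_distance(f_hog[i], f_hog[j])
        + l_tex * hist_distance(f_tex[i], f_tex[j])
        + l_color * hist_distance(f_color[i], f_color[j])
    )


if __name__ == "__main__":
    same = [[0.5, 0.5], [0.5, 0.5]]
    disjoint = [[1.0, 0.0], [0.0, 1.0]]
    # Identical HOG and texture, completely different colour: only lambda_3 contributes.
    print(feature_difference(0, 1, same, same, disjoint))  # 0.6
```

Because the weights sum to 1 and each distance lies in [0, 1], D(i,j) also stays in [0, 1] under this assumption, which keeps it on a comparable scale to the thresholds used in the propagation step.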

2.3.3 Geodesic propagation

Geodesic propagation has been shown to be effective for semantic labeling [12, 31]. We follow the definition of geodesic distance in [12, 31] but modify the implementation details.

In the graph model for geodesic propagation, the weight on each node is converted into a geodesic distance, and the weight on each edge is the difference cost. The object labels propagate iteratively through all the superpixels in the ROI. If the raw probability of node i for category C is higher than $T_p$ and the saliency value of i is also higher than $T_s$, the weight on node i consists of the probability, the saliency, and the bounding box score. Otherwise, the weight on node i consists of the probability of the other categories and the negated saliency value, as shown in Eq. 3.

where Op(i,o) denotes the probability that node i belongs to object o, P(i,C) is the raw probability of category C, and S(i) is the saliency value of i. The indicator ib(i,o) ∈ {0,1} indicates whether i lies inside the scope of o, and B(o) is the score of the detected bounding box of o.

The node weights are normalized and converted to initial geodesic distances, as shown in Eq. 4. The geodesic distances are inversely related to the weights, i.e., a node with a higher weight has a shorter distance.

At the beginning of propagation, all nodes are unlabeled. In each step, the node with the shortest geodesic distance, taken over all object labels of all unlabeled nodes, is selected as the current seed $s$, and the object label $l$ associated with this distance becomes the final label of $s$. Node $s$ is then marked as labeled and is not considered in subsequent steps. Next, the geodesic distances of the unlabeled neighbors of $s$ are updated. As shown in Eq. 5, if $D(s,j)$ is lower than a threshold $T_1$ and the gradient difference $bdry(s,j)$ between $s$ and its neighbor $j$ is lower than a threshold $T_2$, then the edge weight $W_e(s,j)$ equals $bdry(s,j)$; otherwise, it is a combination of $D(s,j)$ and $bdry(s,j)$:

$$W_e(s,j) = \begin{cases} bdry(s,j), & \text{if } D(s,j) < T_1 \text{ and } bdry(s,j) < T_2, \\ \lambda_d \, D(s,j) + \lambda_b \, bdry(s,j), & \text{otherwise,} \end{cases} \qquad (5)$$

where $\lambda_d$ and $\lambda_b$ are set to 0.2 and 0.8 experimentally.

If $geoDis(s,o) + W_e(s,j)$, where $o$ is the object label of $s$, is shorter than the current distance $geoDis(j,o)$, we update $geoDis(j,o)$ to this new distance; otherwise, $geoDis(j,o)$ remains unchanged.
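The propagation loop above behaves like a multi-source Dijkstra search in which every (node, object label) pair carries its own geodesic distance. The sketch below implements it with a priority queue. It takes the initial distances (the output of Eq. 4) as input rather than computing them, uses the weighted-sum reading of the edge weight when the thresholds are exceeded, and stores D and bdry in dictionaries keyed by undirected edges (smaller node id first); these choices, the string object labels, and all names are assumptions.

```python
import heapq
import math
from typing import Dict, List, Mapping, Tuple

Edge = Tuple[int, int]


def edge_weight(d: float, bdry: float, t1: float, t2: float,
                lam_d: float = 0.2, lam_b: float = 0.8) -> float:
    """W_e(s, j): gradient cost alone for similar, boundary-free pairs, else a weighted mix."""
    if d < t1 and bdry < t2:
        return bdry
    return lam_d * d + lam_b * bdry


def geodesic_propagation(
    init_dist: Mapping[int, Mapping[str, float]],
    neighbors: Mapping[int, List[int]],
    diff: Mapping[Edge, float],
    bdry: Mapping[Edge, float],
    t1: float,
    t2: float,
) -> Dict[int, str]:
    """Label every node with the object whose geodesic distance reaches it first."""
    dist = {n: dict(per_label) for n, per_label in init_dist.items()}
    heap = [(d, n, o) for n, per_label in dist.items() for o, d in per_label.items()]
    heapq.heapify(heap)
    labels: Dict[int, str] = {}
    while heap:
        d, s, o = heapq.heappop(heap)
        if s in labels or d > dist[s][o]:
            continue  # node already labeled, or a stale queue entry
        labels[s] = o  # globally shortest distance: s becomes the seed with label o
        for j in neighbors[s]:
            if j in labels:
                continue
            key = (min(s, j), max(s, j))
            new_d = d + edge_weight(diff[key], bdry[key], t1, t2)
            if new_d < dist[j].get(o, math.inf):
                dist[j][o] = new_d
                heapq.heappush(heap, (new_d, j, o))
    return labels


if __name__ == "__main__":
    # Chain 0 - 1 - 2 - 3 with a strong boundary between nodes 1 and 2.
    init = {0: {"a": 0.0, "b": 1.0}, 1: {"a": 0.9, "b": 0.9},
            2: {"a": 0.9, "b": 0.9}, 3: {"a": 1.0, "b": 0.0}}
    nbrs = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2]}
    diff = {(0, 1): 0.1, (1, 2): 0.9, (2, 3): 0.1}
    bdry = {(0, 1): 0.1, (1, 2): 0.9, (2, 3): 0.1}
    print(geodesic_propagation(init, nbrs, diff, bdry, t1=0.85, t2=0.5))
    # {0: 'a', 3: 'b', 1: 'a', 2: 'b'}
```

Lazy deletion (skipping stale heap entries) avoids a decrease-key operation, which Python's `heapq` does not provide.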

3 Results and discussion

3.1 Dataset and experimental setup

To evaluate the performance of our method, we use the public datasets Polo [13, 15] and TUD [32, 33].

Polo dataset. This dataset contains 317 polo scene images covering 6 categories: sky, grass, person, horse, ground, and tree. We split these 317 images into 80 training images and 237 testing images, as in [13, 15]. The horse and person categories are the object categories, and the others are material categories. The 80 training images contain 208 horse instances, and the 237 testing images contain over 500 instances with different poses, appearances, and scales. Each image in this dataset contains one or more object instances, some of which are occluded. This dataset is therefore suitable for testing the performance of object labeling.

In this dataset, the category annotation map of each image is provided, but object instance annotations are not. To evaluate our method quantitatively, we therefore annotate the ground truth of the object instances. To make our object annotation fit the category regions of the provided annotation, we developed an annotation tool that tailors our annotation to the category boundary. In this way, pixels outside our object annotation are considered void. In addition, we annotate the bounding boxes used to train object detection.

The label maps of category and object are shown in Fig. 4b, c respectively. In (b), different colors indicate different categories. In (c), different colors indicate different instances. Black indicates void in both category and object label maps. We order the instances in a scene by their layouts from left to right. The first instance is visualized in red, and the second in yellow, etc. Subfigure (d) shows the annotated bounding box of each instance.

TUD dataset. This dataset was previously used for tracking by detection. It provides 201 images from a pedestrian sequence, with 1216 tight bounding boxes and instance annotations of the pedestrians. Most pedestrians appear in side-view poses, and many are partially occluded over the sequence. Pedestrians with at least 50% visibility are annotated. We randomly select 100 images for training and use the other 101 images for testing. Category annotation maps are not provided for this dataset, so we manually annotate the categories ground, person, building, and car. In addition, we make the annotation of the person category consistent with the given instance annotations. As illustrated in Fig. 5, the colors in (b) indicate the category labels and the colors in (c) and (d) indicate instances.

Baseline methods. We adopt several baseline methods for quantitative comparison. For scene-level labeling, the baseline is the method of Shotton et al. [4], a typical scene-level approach. For object-level labeling, the baselines are E-SVM and HV+GC, following [15]. E-SVM generates segmentation by transferring template masks to the detected objects, and HV+GC obtains instance segmentation by performing GrabCut on the voted hypotheses.

Running time. The average image resolution is roughly 500 × 350 pixels for the Polo dataset and 640 × 480 pixels for TUD. Our MATLAB implementation takes about 5 min per image for learning and less than 1 min for scene-level and object-level labeling, on a desktop with a 3.2-GHz Intel i5 CPU and 12 GB of memory.

3.2 Scene labeling results

For the Polo dataset, we select 10 sample images from the training set to learn our object detector. These sample images include object instances with variation in scale, pose, and occlusion. The other 70 training images are used for validation. The learned detector is applied to each test image, generating multiple bounding box predictions, which are denoted as {H}. We prune {H} to at most 7 objects, with $T_h = 0.35$ set experimentally.

For the TUD dataset, we also select 10 sample images from the training set. Images in this dataset are similar to one another because they come from the same tracking sequence. To avoid overfitting, we validate on a subset of the training images instead of the whole training set. {H} is pruned to at most 10 objects.

We adapt the TextonBoost framework [4], trained for 500 boosting rounds, to generate the raw category probabilities. In the graph-cut optimization, we run 1000 iterations.

We use two metrics for scene-level labeling comparison: total accuracy and average accuracy. Total accuracy is the overall per-pixel accuracy, and average accuracy is the mean of the per-category accuracies. Tables 1 and 2 show the comparisons on the Polo and TUD datasets. As these tables show, our method outperforms that of Shotton et al. [4] in both total and average accuracy, especially in average accuracy. This is because our method performs well for both material and object categories, whereas theirs performs well on material categories but poorly on object categories. Figures 6 and 7 show examples of our scene-level labeling results on the Polo and TUD datasets, respectively.
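The two scene-level metrics can be computed as below. The toy example illustrates why average accuracy is the more telling metric here: a labeling that misses a small object category entirely can still score a high total accuracy. Excluding void pixels through an optional `void` label is an assumption, as is the function name.

```python
from typing import Hashable, Optional, Sequence, Tuple


def scene_accuracies(
    pred: Sequence[Hashable], gt: Sequence[Hashable], void: Optional[Hashable] = None
) -> Tuple[float, float]:
    """Return (total accuracy over pixels, mean of per-category accuracies)."""
    pairs = [(p, g) for p, g in zip(pred, gt) if void is None or g != void]
    total = sum(p == g for p, g in pairs) / len(pairs)
    per_category = []
    for c in {g for _, g in pairs}:
        in_c = [p == g for p, g in pairs if g == c]
        per_category.append(sum(in_c) / len(in_c))
    return total, sum(per_category) / len(per_category)


if __name__ == "__main__":
    gt = ["grass"] * 9 + ["horse"]
    pred = ["grass"] * 10  # the single horse pixel is missed
    print(scene_accuracies(pred, gt))  # (0.9, 0.5)
```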

Figure 8 shows the raw labeling and the final labeling of our method. The raw labeling is obtained from the initial probabilities, and the final labeling is refined with our object labeling result. A comparison of the two shows that the refinement improves both the overall accuracy and the object segmentation (see Fig. 8 for details).

3.3 Object labeling results

The accuracy of object labeling differs from that of semantic labeling. For example, assigning a wrong category label to a pixel leads to an inaccurate understanding of the scene; however, assigning a wrong object label to a pixel does not change the fact that the pixel belongs to an object of the given category, since the purpose of object labeling is to partition multiple instances of the same category.

Therefore, we use different criteria for object labeling. We compute four evaluation metrics: (1) pixel-wise precision per object, averaged over all object predictions (Mi-AP); (2) pixel-wise recall per ground-truth object, averaged over all ground-truth objects (Mi-AR); (3) pixel-wise precision over all pixels (Ma-AP); and (4) pixel-wise recall over all pixels (Ma-AR).

To compare quantitatively with the ground truth, we need to find matching pairs between the predictions and the annotations. We order the segments of the instances from left to right, so the matching pairs can be found efficiently. The comparisons of object labeling on Polo and TUD are listed in Tables 3 and 4. According to these tables, our method outperforms that of He and Gould [15] on all four metrics. It also outperforms the E-SVM and HV+GC baselines, except in Ma-AP on the Polo dataset.
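The four object-level metrics and the left-to-right matching can be sketched as follows. Ordering instances by the mean column of their pixels, pairing the k-th predicted instance with the k-th ground-truth instance, scoring unmatched instances as zero, and treating label 0 as non-object are all assumptions. In particular, the sketch counts predicted pixels that fall on non-object ground truth as false positives, whereas the paper's handling of void pixels may differ.

```python
from typing import Dict, List, Sequence, Set, Tuple

Pixel = Tuple[int, int]


def instance_pixels(label_map: Sequence[Sequence[int]]) -> Dict[int, Set[Pixel]]:
    """Pixels of each instance id; 0 is treated as non-object."""
    pixels: Dict[int, Set[Pixel]] = {}
    for r, row in enumerate(label_map):
        for c, v in enumerate(row):
            if v != 0:
                pixels.setdefault(v, set()).add((r, c))
    return pixels


def left_to_right(pixels: Dict[int, Set[Pixel]]) -> List[int]:
    """Instance ids sorted by the mean column of their pixels."""
    return sorted(pixels, key=lambda k: sum(c for _, c in pixels[k]) / len(pixels[k]))


def object_metrics(
    pred_map: Sequence[Sequence[int]], gt_map: Sequence[Sequence[int]]
) -> Dict[str, float]:
    pred, gt = instance_pixels(pred_map), instance_pixels(gt_map)
    pairs = list(zip(left_to_right(pred), left_to_right(gt)))
    overlap = {(p, g): len(pred[p] & gt[g]) for p, g in pairs}
    tp_of_pred = {p: overlap[(p, g)] for p, g in pairs}
    tp_of_gt = {g: overlap[(p, g)] for p, g in pairs}
    total_tp = sum(overlap.values())
    n_pred_px = sum(len(s) for s in pred.values())
    n_gt_px = sum(len(s) for s in gt.values())
    return {
        # per-object precision averaged over predictions; unmatched predictions score 0
        "Mi-AP": sum(tp_of_pred.get(p, 0) / len(pred[p]) for p in pred) / max(len(pred), 1),
        # per-object recall averaged over ground-truth instances
        "Mi-AR": sum(tp_of_gt.get(g, 0) / len(gt[g]) for g in gt) / max(len(gt), 1),
        # precision and recall pooled over all object pixels
        "Ma-AP": total_tp / max(n_pred_px, 1),
        "Ma-AR": total_tp / max(n_gt_px, 1),
    }


if __name__ == "__main__":
    gt = [[1, 1, 0, 2, 2]]
    pred = [[5, 5, 5, 7, 0]]
    print(object_metrics(pred, gt))  # Mi-AP about 0.833; Mi-AR, Ma-AP, Ma-AR all 0.75
```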

In our experiments, the thresholds $T_p$ and $T_s$ are 0.3 and 0.45, and $T_1$ and $T_2$ are 0.85 and 0.5, for the Polo dataset. For the TUD dataset, these parameters are 0.5, 0.2, 0.85, and 0.8, respectively. Some examples of object labeling results are shown in Figs. 9 and 10. These examples include multiple instances at different scales, in different poses, and even under occlusion. Different instances are shown in different colors, and non-object regions are shown as void.

4 Conclusions

In this paper, we propose a hierarchical semantic segmentation method that performs both scene-level and object-level labeling. The two levels work together to give a more accurate understanding of an image scene. At the scene level, we use a feature-based MRF model to recognize the categories. At the object level, we use a constraint-based geodesic propagation to segment each instance. The experimental results show the good performance of our framework. However, one of the most important priors for object segmentation, shape information, is not used explicitly in this work. In future work, we therefore plan to use shape priors to improve the accuracy. We also plan to build our own dataset for object labeling that includes many more object instances than the two datasets used in this work, and to evaluate and improve our method through further comparative experiments on it.

We will also explore more discriminative features for segmenting object instances, such as features learned by convolutional neural networks. Our method can be applied to autonomous driving systems, robots, and similar applications. To reduce its computational cost, parallel methods such as [34, 35] may be adopted.

References

[1] Z Zhang, S Fidler, R Urtasun, in IEEE Conference on Computer Vision and Pattern Recognition (CVPR). Instance-Level Segmentation for Autonomous Driving with Deep Densely Connected MRFs (IEEE Computer Society, Las Vegas, 2016).

[2] C Yan, H Xie, D Yang, et al, Supervised hash coding with deep neural network for environment perception of intelligent vehicles. IEEE Trans. Intelligent Transportation Systems. 19(1), 284–295 (2018).

[3] C Yan, H Xie, S Liu, J Yin, Y Zhang, Q Dai, Effective uyghur language text detection in complex background images for traffic prompt identification. IEEE Trans. Intelligent Transportation Systems. 19(1), 220–229 (2018).

[4] J Shotton, J Winn, C Rother, et al, Textonboost for image understanding: Multi-class object recognition and segmentation by jointly modeling texture, layout, and context. Int. J. Comput. Vis. 81(1), 2–23 (2009).

[5] J Xiao, L Quan, in IEEE Int. Conf. Computer Vision. Multiple view semantic segmentation for street view images (IEEE Computer Society, Kyoto, 2009), pp. 686–693.

[6] J Yao, S Fidler, R Urtasun, in The IEEE Conference on Computer Vision and Pattern Recognition. Describing the scene as a whole: Joint object detection, scene classification and semantic segmentation (IEEE Computer Society, Providence, 2012), pp. 702–709.

[7] X Ren, L Bo, D Fox, in the IEEE Conference on Computer Vision and Pattern Recognition. RGB-(D) scene labeling: Features and algorithms (IEEE Computer Society, Providence, 2012), pp. 2759–2766.

[8] J Tighe, M Niethammer, S Lazebnik, in The IEEE Conference on Computer Vision and Pattern Recognition. Scene parsing with object instances and occlusion ordering (IEEE Computer Society, Columbus, 2014), pp. 3748–3755.

[9] C Liu, J Yuen, A Torralba, in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. Nonparametric scene parsing: Label transfer via dense scene alignment (IEEE, Miami, 2009), pp. 1972–1979.

[10] H Zhang, J Xiao, L Quan, in Proceedings of European Conference on Computer Vision. Supervised label transfer for semantic segmentation of street scenes (Springer, Crete, 2010), pp. 561–574.

[11] J Tighe, S Lazebnik, in Proceedings of European Conference on Computer Vision. Superparsing: Scalable nonparametric image parsing with superpixels (Springer, Crete, 2010), pp. 352–365.

[12] X Chen, Q Li, Y Song, et al, in Proceedings of European Conference on Computer Vision. Supervised geodesic propagation for semantic label transfer (Springer, Florence, 2012), pp. 553–565.

[13] H Zhang, T Fang, X Chen, et al, in 24th IEEE Conference on Computer Vision and Pattern Recognition. Partial similarity based nonparametric scene parsing in certain environment (IEEE Computer Society, Colorado Springs, 2011), pp. 2241–2248.

[14] J Tighe, S Lazebnik, in IEEE Conference on Computer Vision and Pattern Recognition. Finding things: Image parsing with regions and per-exemplar detectors (IEEE Computer Society, Portland, 2013), pp. 3001–3008.

[15] X He, S Gould, in IEEE Conference on Computer Vision and Pattern Recognition. An exemplar-based CRF for multi-instance object segmentation (IEEE Computer Society, Columbus, 2014), pp. 296–303.

[16] BL Price, BS Morse, S Cohen, in The IEEE Conference on Computer Vision and Pattern Recognition. Geodesic graph cut for interactive image segmentation (IEEE Computer Society, San Francisco, 2010), pp. 3161–3168.

[17] D Batra, A Kowdle, D Parikh, et al, Interactively co-segmentating topically related images with intelligent scribble guidance. Int. J. Comput. Vis. 93(3), 273–292 (2011).

[18] J Wu, Y Zhao, J Zhu, et al, in IEEE Conference on Computer Vision and Pattern Recognition. Milcut: A sweeping line multiple instance learning paradigm for interactive image segmentation (IEEE Computer Society, Columbus, 2014), pp. 256–263.

[19] C Rother, TP Minka, A Blake, et al, in IEEE Conference on Computer Vision and Pattern Recognition. Cosegmentation of image pairs by histogram matching - incorporating a global constraint into mrfs (IEEE Computer Society, New York, 2006), pp. 993–1000.

[20] S Vicente, V Kolmogorov, C Rother, in 11th European Conference on Computer Vision. Cosegmentation revisited: Models and optimization (Springer, Crete, 2010), pp. 465–479.

[21] S Vicente, C Rother, V Kolmogorov, in The 24th IEEE Conference on Computer Vision and Pattern Recognition. Object cosegmentation (IEEE Computer Society, Colorado Springs, 2011), pp. 2217–2224.

[22] X Liang, Y Wei, X Shen, et al, Proposal-free network for instance-level semantic object segmentation. IEEE Transactions on Pattern Analysis and Machine Intelligence (2018). https://doi.org/10.1109/TPAMI.2017.2775623.

[23] Y Chen, X Liu, M Yang, in CVPR. Multi-instance object segmentation with occlusion handling (IEEE Computer Society, Boston, 2015), pp. 3470–3478.

[24] A Levinshtein, A Stere, KN Kutulakos, DJ Fleet, SJ Dickinson, K Siddiqi, Turbopixels: Fast superpixels using geometric flows. IEEE Trans. Pattern Anal. Mach. Intell. 31(12), 2290–2297 (2009).

[25] P Arbelaez, M Maire, CC Fowlkes, J Malik, Contour detection and hierarchical image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 33(5), 898–916 (2011).

[26] PF Felzenszwalb, RB Girshick, DA McAllester, D Ramanan, Object detection with discriminatively trained part-based models. IEEE Trans. Pattern Anal. Mach. Intell. 32(9), 1627–1645 (2010).

[27] Y Boykov, O Veksler, R Zabih, Fast approximate energy minimization via graph cuts. IEEE Trans. Pattern Anal. Mach. Intell. 23(11), 1222–1239 (2001).

[28] S Goferman, L Zelnik-Manor, A Tal, Context-aware saliency detection. IEEE Trans. Pattern Anal. Mach. Intell. 34(10), 1915–1926 (2012).

[29] M Cheng, NJ Mitra, X Huang, PHS Torr, S Hu, Global contrast based salient region detection. IEEE Trans. Pattern Anal. Mach. Intell. 37(3), 569–582 (2015).

[30] A Farhadi, I Endres, D Hoiem, DA Forsyth, in 2009 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR 2009). Describing objects by their attributes (IEEE Computer Society, Miami, 2009), pp. 1778–1785.

[31] Q Li, X Chen, Y Song, Y Zhang, X Jin, Q Zhao, Geodesic propagation for semantic labeling. IEEE Trans. Image Process. 23(11), 4812–4825 (2014).

[32] H Riemenschneider, S Sternig, M Donoser, et al, in Proceedings of European Conference on Computer Vision. Hough regions for joining instance localization and segmentation (Springer, Florence, 2012), pp. 258–271.

[33] M Andriluka, B Schiele, S Roth, in the IEEE Conference on Computer Vision and Pattern Recognition. People-tracking-by-detection and people-detection-by-tracking (IEEE Computer Society, Anchorage, 2008), pp. 2759–2766.

[34] C Yan, Y Zhang, J Xu, F Dai, L Li, Q Dai, F Wu, A highly parallel framework for HEVC coding unit partitioning tree decision on many-core processors. IEEE Signal Process. Lett. 21(5), 573–576 (2014).

[35] CC Yan, Y Zhang, J Xu, F Dai, J Zhang, Q Dai, F Wu, Efficient parallel framework for HEVC motion estimation on many-core processors. IEEE Trans. Circ. Syst. Video Techn. 24(12), 2077–2089 (2014).

Acknowledgements

The authors would like to thank the editors and anonymous reviewers for their valuable comments.

Funding

This work is supported by the National Natural Science Foundation of China (61502036), the General Project of Scientific Research Project of the Beijing Education Committee (KM201611417015), and the Open Funding of Beijing Key Laboratory of Information Service Engineering (Zk20201502).

Availability of data and materials

Not applicable.

Author information


Contributions

QL conceived the idea of this work and discussed it with the other two authors. QL carried out the main experiments and wrote the manuscript. AL and HL read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Li, Q., Liang, A. & Liu, H. Hierarchical semantic segmentation of image scene with object labeling. J Image Video Proc. 2018, 15 (2018). https://doi.org/10.1186/s13640-018-0254-1


DOI: https://doi.org/10.1186/s13640-018-0254-1